My So-Called Career/1

Preface

In retrospect it is clear to me that my arrival at Salomon marked the beginning of the end of that hallowed institution. Wherever I went I couldn’t help noticing, the place fell apart. Not that I was ever a big enough wheel in the machine to precipitate its destruction on my own. But that they let me… in the door at all was an early warning signal. Alarm bells should have rung.

— Michael Lewis, Liar’s Poker

Michael Lewis’s reflection on his first job on Wall Street is an uncanny match with my own experience in the corporate world. Polaroid, Wang Labs… admired and on top when I first arrived, but dead and gone a decade later. The time to work at those places was five or ten years earlier, but back then I doubt they would have had me. Like Lewis, despite my Princeton/MIT credentials I was nevertheless, by any reasonable standards, unemployable, not just at my first job but hardly qualified for every subsequent position as well. My 10+ years of physics education somehow never was called into use. Nor was I skillful in corporate politics and “managing up”, but I was able to learn by doing and eked out some measure of success. The first time I felt sure of myself was fourteen years later, when I became an industry analyst at a small firm in Boston, and even more so when I quit four years after that to do it on my own and took half my clients with me.

That was 30 years ago. Until recently, Junell was always asking, “Are you retired yet?” When you work out of your house, it’s hard to say, but now I most definitely am. This year I sold the business – my books and training, websites, trademarks, and associated intellectual property – so there’s nothing to do now. When I go to my desk every day, it’s just to trade stocks and figure out what to do with my new personal website. So if there is a story to tell about my so-called career, now is the time to tell it.

When people would ask what I do, Junell would say something flattering but not at all how I would describe it. My business was never something anyone would care about, and it changed over time. I’ve been a scientist, marketer, engineering manager, industry analyst, consultant, author, and trainer. For the past dozen years, I’m probably best known for the books and training under the trademark “Method and Style”, teaching people how to model their business processes in a diagramming language called BPMN and their operational decisions in a similar language DMN. Not how to make their processes and decisions faster or better, just how to communicate their logic clearly in these diagrams. Hard to believe one could make a living doing that, but there you are.

Method and Style is so far removed from what I did in the first 30 years of my working life that I still wonder: how on earth did I wind up doing this? Junell says I did it by continually reinventing myself, but I don’t see it that way at all. Although I bounced from one thing to the next, each step in my career was always connected to what I did previously. I never felt I was starting over.

I’ve worked in technology my whole career, and it still amazes me how primitive it was when I began. When I started, PCs did not exist, or email, or the web. No word processing or cell phones. We used slide rules not calculators. I don’t think a younger person today could even imagine it.

I’ve had the good fortune to work or study with many famous men – Nobel-winning physicists (Rai Weiss) and almost winning ones (Victor Weisskopf, David Wilkinson), brilliant inventors (Edwin Land, An Wang), famous photographers (Minor White, Ansel Adams) – but I never accomplished anything truly great myself. I’m not famous. I don’t have a Wikipedia page. I don’t give TED Talks. If you Google my name, at least I’m the first entry that shows up.

On the other hand, I managed to stay married for over 40 years, raise two happy and successful kids, and live in a beautiful house in California. I’m content with that.

Bruce Silver

Pasadena, November 2024

Preface 2

It’s taken a while to get this website together, and a lot has gone down since I first wrote this tale. Junell’s health began failing in the fall of 2023 and never got better. She died in June of this year, and I’m not fully recovered yet. In the midst of all that, a huge fire burned down most of my Altadena neighborhood in January. Our house was spared, but we had to move out for ten weeks while they repaired the smoke damage and the water supply.

So I’ve been alone now for five months and have spent a lot of time reflecting on my life. Not so much on my career, my professional life, but my personal life, my real life, which I realize now I should have paid closer attention to. I did write a companion piece, My So-Called Real Life, as a remembrance of those 45 wonderful years with Junell. But in addition, I now see this piece as part of my legacy to my family and friends. I’m almost 78, and I don’t have that many years left. So I need to go through this again, maybe take out unnecessary detail, so people might actually read it.

I mentioned in the first Preface that my career began in the pre-technology age: no PCs, no cell phones, no internet. It’s hard to imagine today. It was a world run by people doing things the old-fashioned way, by hand, and very slowly. And after a brief dabbling in consumer electronics and electronic photography, the last 40 years of my career were all about changing all that through business automation.

Until the 1980s, business ran at the speed of paper. You had to type it, print it, file it, retrieve it, put it in the mail. Unimaginable today. At Wang we put the paper into the computer by taking a picture of it, so you could at least file and retrieve it electronically and email it within the company. You couldn’t email it outside the company, although I tried; that would have to wait for the internet.

And once we had internet and web services, businesses could automate their business processes end to end. I got involved in standards to allow that to happen. Actually, most people used those standards just to document and analyze how their business processes worked; they weren’t ready to automate yet. And the standards weren’t really oriented to those people, so I developed methodologies to make them more usable and wound up doing training around the world on that.

And after that my attention turned to automating decision logic within those processes, again using business-oriented standards. That took me up to retirement, and now everything is being hyper-automated using AI. We’ve gone from needing human labor for every little thing to not needing human labor for anything at all. So yes, business automation has truly changed the world… but are we better off for it? It’s not something we ever thought about.

Bruce Silver

Pasadena, November 2025

Student

Princeton

Growing up in a Southern California beach town, I was looking for a college experience that was as different from that as possible. At Princeton, I certainly got it. Back then, Ivy League was not something Californians aspired to. When I entered in 1966, the school was just as you might imagine: all male, mostly white, Southern, preppy, far closer to F. Scott Fitzgerald’s Princeton than to the Princeton of today. Compared to, say, Harvard or Berkeley, Princeton stood out for the way professors interacted with undergraduates. Even in the introductory freshman courses, the sections, small groups where they went over the homework and discussed related topics, were taught by tenured professors, not grad students. On the other hand, unlike MIT, where I went later, Princeton did not encourage undergrads to plunge into original research until their senior year. In fact, for the first two years you were required to study such a wide range of subjects – English, history, social sciences, music, a foreign language – that there was no time to immerse yourself in your major.

In the ’60s, the LA public schools – before busing and Prop 13 – were outstanding and had prepared me well for college. At Princeton, most of the Exeter/Andover types were overwhelmed with the volume of freshman coursework, but it was pretty much what I was used to.

For exams there was an honor system, no proctors in the room to prevent cheating. Instead, on the exam booklet each student had to write, “I pledge my honor as a gentleman that during this examination I have neither given nor received assistance.” I never observed any cheating, but about 40 minutes into my first freshman physics midterm, a student in the front row suddenly jumped up and shouted, “I can’t do it!” Then he pulled out a gun, aimed it at his head, pulled the trigger, and fell to the floor. A minute later the door opened, another fellow came in and dragged out the limp body by his shirt collar. That was my introduction to Princeton exams.

My choice to major in physics had a huge impact on everything that followed, but if you ask why I did that, I couldn’t tell you. Because it was challenging and I was good at it, I suppose. In my high school, seniors were able to enroll half time as freshmen at UCLA, where I took calculus and physics. On one of the physics midterms I got the highest score, so I was feeling confident. The thing I liked about physics was it does not require memorizing lots of details. You just need to be able to conceptualize the problem as a model, a simple diagram, apply some basic principles to it, and the answer comes out.

I still do it that way. That approach has served me well over my entire career, almost none of which involved the practice of physics. In fact, the thing I am best known for today, called Method and Style, is based on conceptualizing complex things as models. In the work of a real physicist, of course, modeling just gets you to square one, which is inevitably followed by a painful slog through problem after problem. I managed to figure that out eventually.

My dad was an aerospace engineer at TRW Systems, and he got me a summer job there in 1966 and 1967. My job title was Junior Computer. In those days, “computer” was a job title for a person. As in the movie Hidden Figures, most computers were women, except at TRW they called them “computresses”. It was mostly a clerical job, graphing calculations for the engineers, who were working on either the space program or military projects. Also like the movie, the engineers were not very nice and looked down on “the help.”

This was before personal computers, even before electronic calculators. Most calculations were done with slide rules. By the second summer, I was able to spend a little time on a real computer, time sharing from a terminal, essentially a clunky electric typewriter with a roll of one-inch paper tape, hooked up via a modem to a mainframe computer somewhere. I could write simple calculation programs in BASIC and run them. I still had to draw the charts by hand. The programs were stored using a pattern of holes punched in the paper tape. Incredibly primitive. But that level of technology is what put a man on the moon!

At the end of sophomore year Princeton students had to declare a major. At age 19, who has any idea of how to make that choice? I chose physics, the path of least resistance. I didn’t realize it at the time, but the strength of the Princeton physics program – then tops in the country, along with MIT – was astrophysics. Looking back, I realize I had a lot of contact with the professors in that group, but I was too young to appreciate how special they were. For example…

Gerard O’Neill, my preceptor in freshman physics, pioneered the idea of human settlement of outer space. This is from his Wikipedia page:

O’Neill became interested in the idea of space colonization in 1969 while he was teaching freshman physics at Princeton University. His students were growing cynical about the benefits of science to humanity because of the controversy surrounding the Vietnam War. To give them something relevant to study, he began using examples from the Apollo program as applications of elementary physics. O’Neill posed the question during an extra seminar he gave to a few of his students: “Is the surface of a planet really the right place for an expanding technological civilization?” His students’ research convinced him that the answer was no.

Bob Dicke, chairman of the department, was one of the first to propose that the universe began as a Big Bang. Moreover, he set out to prove it by detecting its remnants as microwave background radiation. In fact, together with another Princeton professor, David Wilkinson, Dicke used an instrument of his own design, the Dicke radiometer, to measure it. As luck would have it, Dicke and Wilkinson were scooped by two Bell Labs scientists, Penzias and Wilson, who discovered the cosmic background radiation by accident when they were tracking down the source of noise in their microwave towers. Dicke’s team confirmed the measurement a couple weeks later and provided the theory explaining it, but naturally it was Penzias and Wilson who got the Nobel Prize. Another giant of physics was professor John Wheeler, who single-handedly revived the study of general relativity and is credited with first use of the term black hole to describe the singularities in space-time that it predicted.

To ease students into their major, Princeton required them to do two term papers in their junior year. This was library research, not lab work. In the fall term, I wrote about Max Planck and the discovery of the quantum in 1900. In the 1890s, based on the success of thermodynamics and electromagnetic theory over the previous 50 years, physicists believed that all the fundamental questions of physics had been solved. But today we just call those successes “classical physics”. Planck’s discovery of the quantum, along with Einstein’s theory of relativity around the same time, created an entirely new foundation for physics in the 20th century.

For the spring term, my junior paper was in astrophysics for professors Partridge and the previously mentioned Wilkinson. The topic was pulsars, recently discovered strange objects far out in space that emit intense flashes of light at a precisely constant interval. [1] It turned out that they are rapidly rotating neutron stars, a form of matter predicted by theorists but never before observed. A neutron star is formed when a large star burns out, collapses under the weight of its own gravity, and explodes in a supernova. If the star is sufficiently massive, the gravitational pull of the matter that is left is enough to squeeze the electrons of each atom inside the nucleus. The result is a neutron star, with the mass of the sun but only 6 miles in diameter. Even heavier stars continue to collapse into a black hole.

[1] Although Cambridge radio astronomers Anthony Hewish and Martin Ryle were awarded the Nobel Prize in 1974 for the discovery of pulsars, it was actually Hewish’s female grad student Jocelyn Bell who made the discovery, and leaving her off the prize was roundly condemned by other physicists. Over 40 years later, Bell was belatedly recognized with a $3 million Special Breakthrough Prize in Fundamental Physics… which she donated entirely to assisting female, minority, and refugee students in becoming physics researchers.

Going through my files, I found that junior paper:

From professor Partridge: After reviewing the junior papers and senior theses I’ve read in the past four years, I consider this the best I’ve seen. We would like a Xerox copy for the group – charge it to account xxx. 1+

And from professor Wilkinson: An outstanding job in all respects! Paper is very well organized and written. The amount of reading and research in this review is impressive. A complete and comprehensive summary…. A fine piece of independent work. 1+ in my opinion.

In those days Princeton grades were 1 through 7, 1 being the best, so a 1+ was even better than that. So yes, I was a very good student. I had been invited to continue working with the top scientists at the top university in that field. Had I done so, I could have had a successful career as a real physicist. But for whatever reason, astrophysics didn’t excite me. I passed on that opportunity.

What Is Physics, Anyway?

At this point I probably need to say a bit about what physics is about, since few people outside the field have any idea. I certainly didn’t have a clear idea myself. At its essence, physics tries to understand the fundamental laws of nature. What is matter composed of? What are the forces that act on it? Underlying those questions is the belief that nature’s laws are universal and described by mathematics. Theoretical physics is the math that explains those things. When I say math, I don’t mean computational math like calculus and differential equations, which I could do. The math used in modern physics is things like group theory, topology, and the geometry of 17-dimensional space. I couldn’t wrap my brain around that, so I had to focus on the experimental side.

Experimental physics observes physical phenomena and compares the result with existing theory. If your experiment reveals a discrepancy, it’s very exciting. You could say that all experimental physics comes down to one basic method: You smash some form of matter or energy into a target and see what bounces off. Astrophysics is even simpler since nature is doing the smashing; you just have to observe what bounces off. That’s all experimental physics tries to do.

Classical physics – Newton’s laws and Maxwell’s equations, nature’s laws as they were understood before 1900 – describes well the phenomena observed in everyday life. In the 19th century we knew that matter was composed of atoms and that it interacted via two forces, gravity and electromagnetism. But as we tried to go further, to understand the components inside an atom and how those components interact, classical physics no longer worked. We needed a new language, quantum theory, to understand the experimental results. In order to probe things that are very small, like what is inside an atom, you need the smashing to be done with very high energy, i.e., particles moving near the speed of light. That’s also where the second bit of 20th century physics comes in, relativity. Quantum physics and relativity give the same result as classical physics within the realm of everyday experience – bodies larger than an atom and not moving close to the speed of light – but they also explain things like what’s inside an atom or what the universe was like when it first began, when it was very small and moving very fast.

As you try to probe smaller and smaller you need higher and higher energies. Today that means atom smashers miles in diameter and costing so much to build and maintain that a single country cannot afford to do it alone. The US gave up twenty years ago. In fact, the most fundamental physics experiments are done in only one place, CERN in Geneva. Discovery of the Higgs particle at CERN a few years ago validated the Standard Model, the current theory that explains all the most fundamental elementary particles – quarks and gluons, leptons and neutrinos – and the forces between them. Unifying gravity (general relativity) with the Standard Model is yet to be done. A lot of money has been spent at CERN on that over the past decade, so far with no breakthrough results.

That’s basic physics. Although its experiments are expensive, it offers absolutely zero practical benefit to mankind. The benefit is only aesthetic, the same as a beautiful painting or symphony.

Then there’s applied physics. It’s not about fundamental discoveries, but its theories and experiments are what has led to practical innovations like the laser, MRI imaging, and semiconductors. It’s much more important to mankind, and actually more interesting, but in the academy it’s definitely second-tier.

Senior Thesis

At Princeton, the senior thesis is an intense year-long project involving original research. Mine was called “Infrared Absorption in Dilute Alloys.” The basic theory says that metals that have low electrical resistance are good reflectors of light. When you add a small amount of impurities to the metal – which is what a dilute alloy means – the theory says a virtual energy state is created that should absorb light at a wavelength related to the energy difference between the virtual state and the conduction band in the metal. This theory was the work of Philip Anderson of Bell Labs, who joined the Princeton faculty a few years after I left and later won the Nobel Prize.

My experiment extended the work of a grad student, who designed the apparatus. My result basically supported the theory, but it wasn’t very significant. Compared to the work undergraduates did at MIT, where I went later, it was kind of a joke.

Biophysics

In 1969, at the start of my senior year, physics moved from ancient Palmer Lab to a modern new building, Jadwin Hall. The dedication of the building featured an address by the eminent mathematician and theoretical physicist Freeman Dyson. Dyson was not even at the University but at the Institute for Advanced Study next door. In physics he is best known for the theory of quantum electrodynamics (QED) – the application of quantum mechanics to electromagnetism. Dyson unified two competing QED theories and proved they were equivalent. Although he contributed many breakthroughs in diverse areas of both mathematics and physics, Dyson never won a Nobel Prize, about which he later said, “If you want to win a Nobel Prize, you should have a long attention span, get hold of a deep and important problem, and stay with it for ten years. That wasn’t my style.” Today his daughter Esther, who was the top industry analyst and venture capitalist in the early days of the personal computer, is probably better known.

In his dedication, Dyson expressed the same view that Planck’s contemporaries had back in the 1890s, that now with the unification of QED and the nuclear weak force, the fundamental questions of physics had all been answered. [2] He thus recommended that aspiring students not waste any more time on fundamental physics but instead focus on the next frontier, biophysics. He told the story of Max Perutz, a chemist working in the physics lab at Cambridge studying X-ray diffraction of proteins. His professor, Lawrence Bragg, had shown that if you could create a crystalline form of some molecule, the pattern of X-rays scattered from that crystal could reveal the molecular structure. It was this technique in that same lab that had uncovered the double helix structure of DNA. Perutz used it to discover the molecular structure of hemoglobin. Dyson was insistent that the next round of great discoveries in physics lay at its intersection with biology.

The story made a strong impression on me, and I decided to give biophysics a try in grad school.

[2] Just like Planck’s contemporaries, Dyson could not have been more wrong. Discoveries at CERN just three years later led to a new theory called quantum chromodynamics (QCD), explaining quarks and the nuclear strong force, unifying the theory of all the forces in nature. Now known as the Standard Model, it successfully predicted all of the elementary particles, several not yet discovered. It was not until 2012 that the last of these, the so-called God particle or Higgs boson, was found using the brand-new Large Hadron Collider at CERN.

The Cold War

One last thing before we leave Princeton behind is the role that the Cold War and its ugliest manifestation, the Vietnam War, played in both physics and my career path. For physics, the Cold War was a godsend. When the Soviet Union beat the USA to space with Sputnik in 1957, it unleashed a torrent of government money to support basic research and education through the National Science Foundation (NSF). The NSF not only provided me scholarship money and subsidized my student loans, but effectively financed all university research in physics. Because of the Cold War, the 60s were boom times for physics departments and students alike.

On the other hand, there was Vietnam. The war lasted from 1964 to 1973, and military service was not voluntary. There was a draft, although if you were in college your service was deferred until graduation. The war was built on lies and stupidity. Over 50,000 Americans died and countless more Vietnamese, for no purpose. Students were overwhelmingly against the war. In the face of protests, President Johnson at the last minute announced he would not run for re-election in 1968. Nixon said he had a secret plan to end the war and he was elected, but it was all a lie. The student protests escalated in 1969-70, and I was involved in that. I mean, who wasn’t?

In December 1969, as I was entering my final semester at Princeton, they changed the draft to a lottery system, based on your birthday. It was expected that numbers up to 140 or so would be drafted. Higher than that you were safe. My number came up 009, draft fodder for sure. It was a sickening feeling, and my options were few: Go to Canada, which a lot of people did; resist and go to jail, which fewer did; fake a medical condition, like Trump; or just be drafted. There was one more option: join the despised ROTC and continue on to grad school. That meant Basic Training, some ROTC classes, another training camp, and after two years a commission as second lieutenant. That came with a two-year active duty obligation, deferred until completion of my graduate degree. I was quite sure the war would be over by then, so even though it made me feel unclean, this was the option I chose.

Nixon said he was ending the war, but in April 1970 he escalated it instead, invading the neighboring country of Cambodia. Campuses all over the US erupted in protest. A week later, four students were shot and killed by National Guard troops at Kent State, and later several more at Jackson State. Students at Princeton and everywhere else went on strike. What did that even mean? It was a month before graduation. My classes were over, but I did decline to take the Comprehensive Exam, which determined honors at graduation. Instead I went to Hartford to campaign for an antiwar priest running for Senate, Joe Duffy.[3] Duffy lost to a Republican, Lowell Weicker, who later turned out to be a key figure in Nixon’s impeachment, a good guy after all.

In June I returned to school for graduation. My parents came – they were divorced a few months later – and my grandparents, too. Princeton gave honorary degrees to Bob Dylan (he wrote a song about it!) and Coretta King. Although I had forgone graduation honors, I did win a Phi Beta Kappa key, which I gave to my grandfather, who was so proud of it. I was extremely sad to leave Princeton.

In physics, a bachelor’s degree is nothing, and there really aren’t master’s degrees. It’s PhD or nothing. For me, it had come down to Stanford or MIT. I chose the latter, not for any good reasons. For sure, my career would have taken a different path had I chosen Stanford.

In my senior year quantum mechanics class, the professor told the story of Louis de Broglie, a French nobleman who was also a physics grad student in the 1920s, the early days of quantum theory. It was known then that light waves under certain conditions behaved as particles. In a flash of insight, de Broglie postulated in his PhD thesis that electrons, which were particles, under certain conditions behaved as waves. He quickly wrote this up in a five-page dissertation, for which he was awarded the Nobel Prize in 1929. “The Nobel, sure he deserved that,” said my professor. “But it was not enough for a doctoral dissertation.” That required more than inspired insight, but learning the craft of physics through years of hard labor, a lesson I was to learn very soon.

At any rate, the ROTC deal sealed my fate. I was committed to complete my PhD no matter what. In July of 1970 I headed off to Fort Knox for 6 weeks of US Army Basic Training.

[3] Weird coincidence, but Duffy’s campaign manager was Mike Medved, who I knew from my high school, where he was a very brainy left-winger. He later had some kind of religious conversion and became a right-wing talk radio host in Seattle.

MIT

When you’re in your 20s, Cambridge MA is the best place in the world. For sure it was the best thing about MIT. Unlike sprawling, bucolic Princeton, the MIT “campus” looked more like the Pentagon, a network of connected industrial-style buildings identified by number not by name. On the other hand, a few minutes off campus you could be sailing on the Charles or sipping cappuccino (even in 1970!) in Harvard Square. Physics at MIT was a lot different from Princeton, much more hard-core. Undergraduates were strongly encouraged to get outside the box of classroom learning and join a research group, do experiments. Many of the grad students there had also been MIT undergrads. I was already starting from behind. The first year or two of physics graduate study at MIT is mostly coursework, which was quite a step up in difficulty from Princeton. The professors, I have to say, were excellent. For grad students there were no sections to help you along. It was sink or swim.

In the summer of 1971, everyone in Cambridge was a hipster, but I had just gotten back from the Army and looked it. Needing a place to stay until classes started, I sublet the room of Rosemary Trowbridge, who was flying off to San Francisco for a month. After she returned, we wound up living together in Harvard Square until 1977.

General Exam

Toward the end of your second year at MIT, following intense review of everything you ever learned about physics – and lots of things you never were taught at all – you take the Generals exam, a brutal two-day affair. The questions mostly aren’t about testing your memory, but rather your ability to think like a physicist. If you pass, no more courses are required; you just work full time on your dissertation. If you fail, you wait a year and try again. If you fail a second time, they give you a Master’s and kick you out of school. That happened to one guy in the research group I was in. Like most people that suffered that fate, he went on to Harvard Business School. Even though The B School was a surefire ticket to riches in the corporate world, in MIT physics that path was considered a disgrace and abject failure.[4]

[4] Observing that was my introduction to the academic mindset, which was especially strong in physics at MIT: Of course, you need to have a PhD; anything less doesn’t count. Then you become a postdoc and soon a physics professor at some top-rated university, where you are admired for your brilliance and you strive for a Nobel Prize. Anything else is failure. At MIT we bathed in this toxic thinking every day.

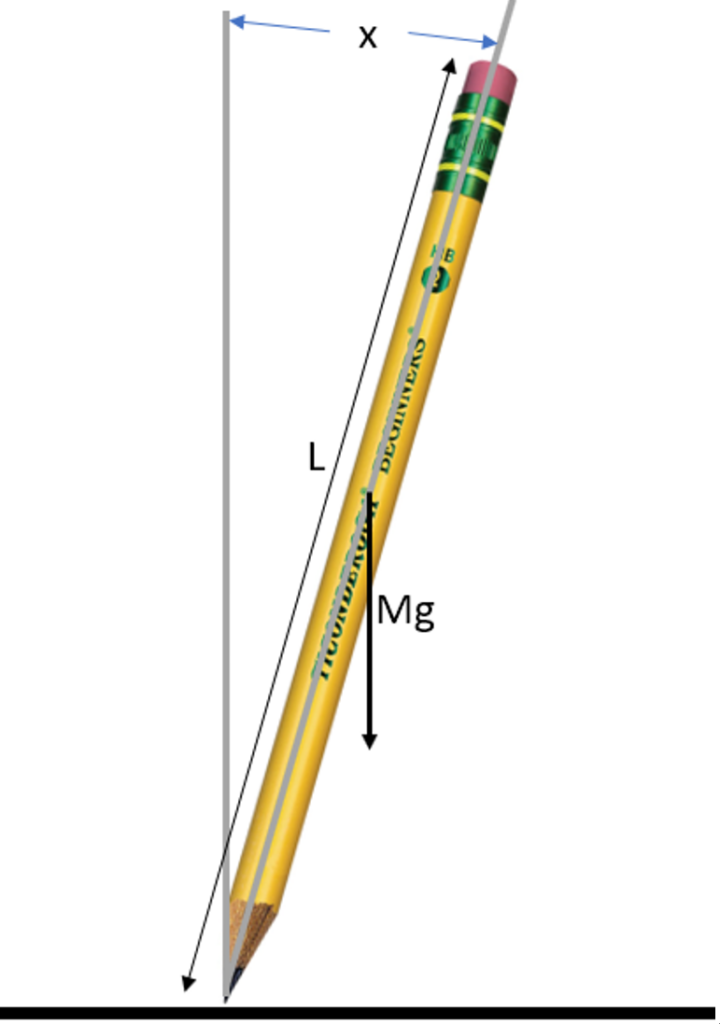

I took the Generals in the spring of 1972 and got the second highest score. There was one question, though, that looked easy but I just could not figure out. For years afterward I would occasionally remember the question and still could not solve it. Then, unbelievably, about five years ago, having left physics for four decades and forgotten all I ever knew about it, the answer came to me in a dream! Here is the question:

A pencil of length L and mass M is balanced on its tip. How long does it take to fall?

In 1972 I got hung up on the fact that it depends: if it’s perfectly balanced it will never fall. But that’s a lame answer. Zero points for that. You need to visualize it as a simple model. Draw the picture. Suppose it starts at a tiny angle x. From there it’s just freshman physics, Newton’s law F = ma. When you work out the differential equation, it’s

d2x/dt2 = k x,

where x is the angle from vertical and k is the positive constant 3g/2L (g the acceleration of gravity). That’s a really simple equation, freshman calculus. But it’s one you NEVER see in physics. What you see in physics is

d²x/dt² = -k x,

where the multiplier of x is negative. It’s the equation for a harmonic oscillator, like a pendulum, a vibrating string, any kind of wave. Almost everything in physics comes down to the harmonic oscillator. But not this.

If you just work out the equation, you get the answer,

time = sqrt(2L/(3g)) * log(π/x0)

where x0 is the initial very small angle. As x0 goes to zero – perfectly balanced – time goes to infinity, as it should. I worked this out again just now. I mention this story because it shows that even though you can forget all the details of physics, the reasoning process stays with you. Forever, I guess.
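For anyone who wants to check the arithmetic, here is a minimal sketch in Python. It assumes the pencil is a uniform thin rod pivoting on its tip, that it starts at rest at angle x0, and that the small-angle equation is used all the way down to horizontal (x = π/2), which is the same simplification as in the text. The function names and the 0.19 m pencil length are illustrative choices, not part of the exam question.

```python
import math


def fall_time_approx(length_m, x0_rad, g=9.81):
    """Estimate t = sqrt(2L/(3g)) * ln(pi/x0), valid for a very small x0."""
    return math.sqrt(2.0 * length_m / (3.0 * g)) * math.log(math.pi / x0_rad)


def fall_time_linear_exact(length_m, x0_rad, g=9.81):
    """Exact solution of the linearized equation x'' = k x, starting at rest.

    With k = 3g/(2L), the solution is x(t) = x0 * cosh(sqrt(k) * t).
    Setting x(t) = pi/2 (pencil horizontal) and solving for t gives
    t = acosh(pi / (2 * x0)) / sqrt(k).
    """
    k = 3.0 * g / (2.0 * length_m)
    return math.acosh(math.pi / (2.0 * x0_rad)) / math.sqrt(k)


if __name__ == "__main__":
    pencil_length_m = 0.19  # assumed length of an ordinary pencil
    for x0 in (1e-2, 1e-4, 1e-8):
        approx = fall_time_approx(pencil_length_m, x0)
        exact = fall_time_linear_exact(pencil_length_m, x0)
        print(f"x0 = {x0:g} rad: approx {approx:.4f} s, linearized {exact:.4f} s")
```

The two functions agree closely for small x0, and the time grows only logarithmically as x0 shrinks, so even a very well balanced pencil falls within a few seconds.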

Teaching Assistant

To pay for tuition I was a teaching assistant the first three or four years. One year I was the “apparatus guy” for the freshman physics lectures of Victor Weisskopf, chairman of the department and a giant in the world of physics. He did his postdoc with Heisenberg, Schrödinger, Pauli, and Bohr – the very founders of quantum mechanics. During WW2 he was group leader of the theoretical division of the Manhattan Project at Los Alamos. He later started the Union of Concerned Scientists working to stop proliferation of nuclear weapons.

From his Wikipedia page:

“One of his few regrets was that his insecurity about his mathematical abilities may have cost him a Nobel prize when he did not publish results (which turned out to be correct) about what is now known as the Lamb shift.[5] … At MIT, he encouraged students to ask questions, and, even in undergraduate physics courses, taught his students to think like physicists, not just to learn physics.”

Yes, exactly.

[5] You see these guys are always thinking about the Nobel!

If you ever took freshman physics, you know there’s a big lecture hall where the prof scribbles equations on the blackboard and does lots of cool demonstrations: pendulums swinging, target shooting, lightning bolts from Van de Graaff generators, that kind of thing. I was Weisskopf’s apparatus guy. My job was to make sure the apparatus for each demonstration was working properly, and if not, fix it. It was a fun job, and doing it for Viki Weisskopf, that was special.

My girlfriend Rosemary taught sixth grade and was into science for kids. One of the projects she did in her class was to make a motor out of a length of insulated wire, two paper clips, a couple of refrigerator magnets, and a battery. At MIT I turned that into a contest for the whole class: who could make the fastest motor using those things.

At MIT not everyone was in physics or engineering. There was an architecture department and even a humanities department. However, for some unexplained reason everyone had to take freshman physics. Not wanting to get rid of that requirement but trying to soften the blow, they made the course self-paced with simple pass/fail exams. One year I taught a section. I had to grade the exams and explain to the students – tears running down their cheeks – why they failed. Not everyone wants to think like a physicist. That was not fun.

One year I was TA for Physics Project Lab, a course for juniors in the department where they performed experiments in modern physics. The experiments were sophisticated but canned. The apparatus and instrumentation were all set up. The students just had to make measurements and write it up. Inevitably, though, students would come to me all frustrated: I’ve tried everything, they said, but just can’t get the instrument to work! I would ask, Is it plugged in? Of course! they said. Do you think I’m an idiot? The problem was always that it wasn’t plugged in. This experience served me well later in life also, as tech support at home.

John King

I’ve put it off as long as possible, but I suppose I need to talk about my research project and dissertation, a six-year sentence of hard labor. Following Dyson’s advice, I looked for something in biophysics, which happened to be the new direction for Professor John King. King had the right pedigree. In places like MIT, besides winning the Nobel Prize yourself – admittedly rare – the next best thing is being trained by a Nobel Prize winner or at least having that in your physics genealogy. King was a student of Jerrold Zacharias, who in turn had been a student of I. I. Rabi in the 1930s. Rabi developed the technique of nuclear magnetic resonance to measure the properties of atomic nuclei and won the Nobel Prize for it in 1944. (MRI imaging used in medicine today has its origins in that physics.) The technique used the interaction of a beam of atoms or molecules with a magnetic field. Zacharias used a variation of this technique to build the first atomic clock, which is now in the Smithsonian.[6]

[6] King always maintained that the Smithsonian had labeled the exhibit backwards, the beam source labeled as the detector and vice versa. Many years later I visited the Smithsonian and saw that he was right.

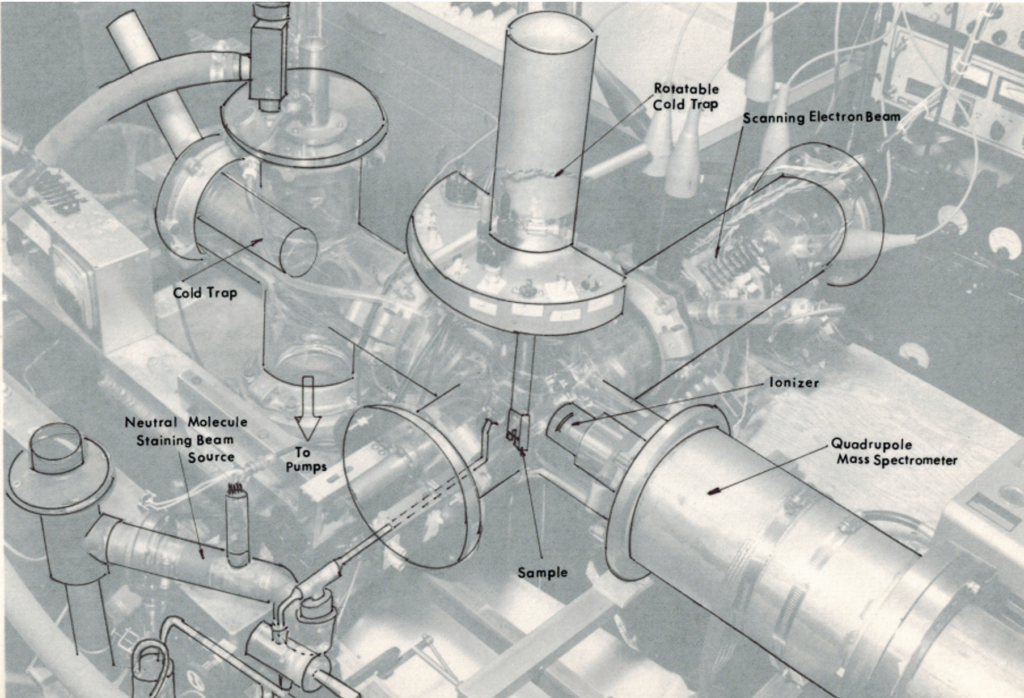

At MIT, Zacharias created the Molecular Beam Lab to continue this technique, and in the 1960s Professor King took it over.[7]

[7] Another Beam Lab colleague of King and Zacharias was Rainer Weiss, who later moved into astrophysics (collaborating with Dicke and Wilkinson at Princeton) and worked in the lab next door at MIT. Rai had the crazy idea in the 1970s that gravitational waves predicted by general relativity could be detected experimentally. Decades later this was achieved. For this, Rai Weiss won the Nobel Prize in 2017.

From King’s Wikipedia page:

“Committed to the interconnectivity of disciplines, he expanded the scope of the lab’s research to include cosmology, low-temperature physics, and biophysics. During his tenure, more than 100 undergraduate and 25 doctoral students earned degrees in those fields. King is best known for his 1960 measurement of the charge magnitude equality of the electron and the proton and the neutrality of the neutron to 10⁻²⁰ of an electron charge. That experiment, which still graces the first page of most electricity and magnetism textbooks, had been prompted by a conjecture that the expansion of the universe was due to a slight charge imbalance.”

The last part describes a so-called null experiment, where two quantities are exactly equal in theory and measured to be equal, but possibly actually different by a teeny tiny bit. If the null experiment finds such a difference, the theory must be revised, which means a Nobel Prize. Here theory says that the magnitudes of the electric charge of a proton and electron are exactly equal, and King’s null experiment verified that to a part in 10²⁰. It’s an impressive result, but you don’t get the Prize for confirming the current theory. King’s reputation for null experiments that could overturn basic theories meant that we had an endless stream of cranks, kooks, and mischief-makers trooping through the lab touting perpetual motion machines and all sorts of nonsense.

You might ask, what does all this have to do with biophysics? Exactly!! Nothing at all. King knew nothing about biology, but – as Dyson had said – biophysics was supposed to be the next frontier. That should have been a warning sign. I knew nothing about biology or biochemistry myself. I tried to learn about them from books but could not. In fact, to me they were the exact opposite of physics, just memorizing lots of details, not figuring out solutions from basic principles. And it turned out that deep down, I had no interest in them, either. That was warning sign number 2, this time flashing bright red! But not thinking clearly about it, I told myself it would all work out and joined King’s Molecular Beam Lab in my first year as a grad student.

Molecule Microscopy

John King believed in learning by doing. “Make mistakes quickly,” was his motto, which was actually good advice. This is from MIT News on his death in 2014:

His belief that anyone could “find something interesting to study about any mundane effect” reflects the independent spirit of King’s own early and eclectic science education. He told his students that “the best way to understand your apparatus is to build it.”

Be that as it may, building your own apparatus from scratch definitely gets in the way of making mistakes quickly. The good part is you learn to improvise. I learned my way around a machine shop, how to use a milling machine and a lathe, how to solder copper and brass pipe, how to build electronic circuits, that sort of thing. The experience was valuable but not worth the time it took. It probably added two years to my sentence of servitude at MIT. And there was no OSHA back then. We used to wash our hands in acetone and trichlorethylene, wrap soldered pieces in wet asbestos, and suffered no ill effects.[8] But it was all improvisational, not as good as a real machinist, plumber, or electronics tech could do.

[8] Another benefit: In 1997, when we moved from our house in Weston, there was asbestos insulation on some heat pipes in the basement. Rather than hire some bogus hazmat contractor, I just wet it and pulled it off myself.

King’s particular idea in biophysics was called Molecule Microscopy. The goal was mapping the weak forces binding molecules to biological surfaces like cells. Standard instruments like scanning electron microscopes created images by bouncing high energy electrons off the hard parts of a surface but could not directly detect the areas that would selectively bind water or some other small molecule, which was the goal of Molecule Microscopy. That was the idea, anyway.

My dissertation was called “Scanning Desorption of Molecules from Model Biological Surfaces”. Completing it took 5 years, every step a struggle. Ultimately, I think they just took pity on me and let me graduate. The idea really didn’t work, and the experience soured me not only on biophysics but on physics in general.

King’s first idea was a false start that took me years of struggle. When it was quietly laid to rest and I moved on to a different approach, it was a depressing and at the same time hopeful moment. I was beginning the sixth year of graduate school.

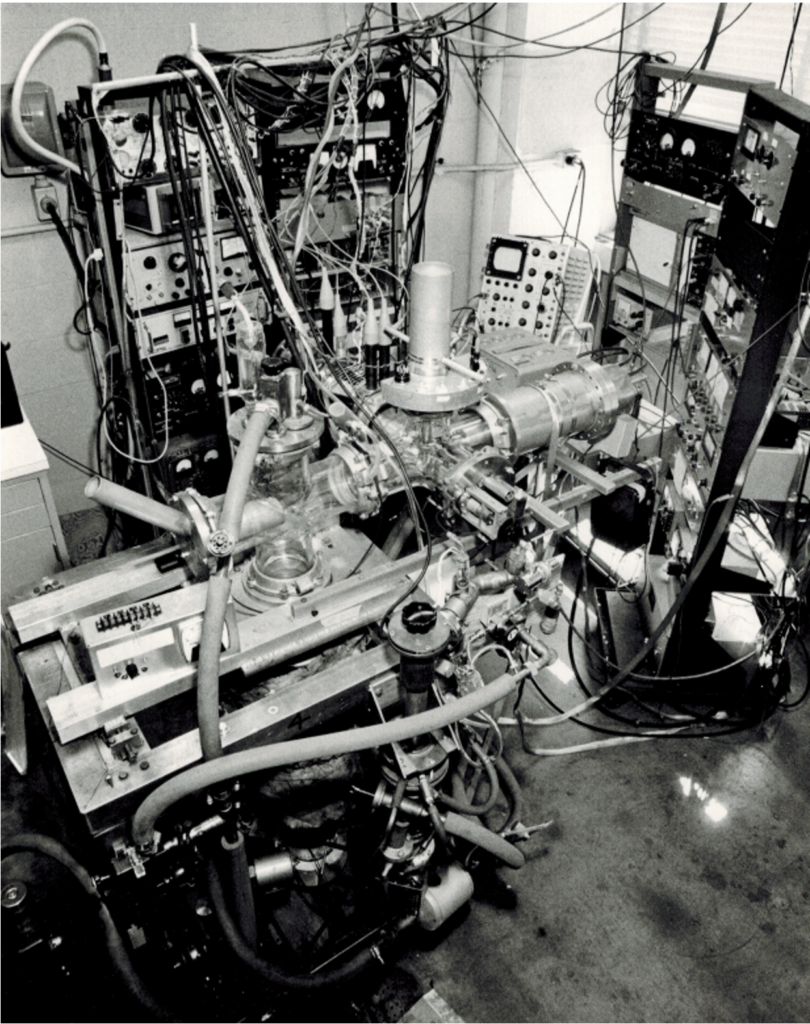

The final experimental apparatus is shown below. Ultimately I was able to get enough data to qualify for my degree in October 1976, although I was never able to measure desorption with any spatial resolution. King’s Molecule Microscopy idea never bore any fruit but did manage to waste the lives of several graduate students. His 2014 obituary in MIT News added this coda:

When inventions such as the molecular microscope were not as successful as he had hoped, King attributed the failure to insufficient effort in combining enough money and skilled personnel. Achieving this winning combination required, in his view, an attack on the problem with “ferocious vigor.” Moderate vigor was not enough.

That’s right, blame it on the grad students. The quote still upsets me.

Photography

I haven’t mentioned yet my favorite thing about MIT, the one thing that actually impacted my career: photography. In the summer of 1971, when I was in ROTC training in Pennsylvania, on weekends I used to visit my ex-roommate Jeff Greene in Princeton, where he had continued as a grad student in architecture. The architecture students had to learn to use the darkroom, and I was fascinated by how that worked. Back in Cambridge, I got a 35mm camera and began to use the MIT Student Center darkroom. I learned how to remove the film and wind it on a spiral reel – all in total darkness – pour in the developer and rock it back and forth for a few minutes, then stop development and “fix” the image, giving a black and white negative. From the negative you would make a positive print in an enlarger. At that point, the room was in dim red light not total darkness. Seeing the image come up, I just loved that! I even sold a print at an art fair for $10. Much more fun than physics!

Then I discovered that the MIT architecture department had a Creative Photography program, and as a student I could take those courses. It was headed by Minor White, a practitioner of Ansel Adams’s Zone System and a giant of photography in his own right. Like Ansel, Minor favored a 4×5 view camera, a large contraption on a tripod where you view the image upside down on the ground glass under a dark cloth. Because the negative is 14 times bigger than a 35mm, you get incredible detail in the photo. Students had to get one and learn to use it with the Zone System.

A photographic print can distinguish 9 zones or levels of gray, each nominally twice the lightness of the level below. Zone 5 is middle gray. Zone 3 is the darkest level that shows clear detail. Zone 7 is the lightest level that shows clear detail. Zone 1 is black, Zone 9 is white. In the Zone System, you have to previsualize the image in your mind to determine which part of the scene should be Zone 3, the darkest level with details, and which part Zone 7, the lightest level with details. You expose the film for Zone 3, two stops down from the average light level, Zone 5. Then – and this is the cool part – you select the right developer and development time to put the part of the scene with the lightest detail in Zone 7 on the print. Today with digital cameras you do this in software, but back in the day it was all chemistry.
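The zone arithmetic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each zone is treated as exactly one stop (a factor of two) from its neighbor, the light meter is assumed to give the exposure that renders the metered area as Zone 5, and the function names are made up for this example. The development step that sets where the highlights land is chemistry and is not modeled here.

```python
def relative_luminance(zone, middle_zone=5):
    """Lightness of a zone relative to middle gray, one stop (2x) per zone."""
    return 2.0 ** (zone - middle_zone)


def placed_shutter_time(metered_time_s, target_zone, middle_zone=5):
    """Shutter time that places a metered area on target_zone.

    The meter reading alone would render the metered area as middle gray
    (Zone 5). Each zone below that halves the exposure time; each zone
    above doubles it. The aperture is assumed to stay fixed.
    """
    return metered_time_s * 2.0 ** (target_zone - middle_zone)


if __name__ == "__main__":
    # Meter the darkest area that should keep detail, then place it on Zone 3.
    metered = 1 / 30  # hypothetical meter reading in seconds
    print("Shutter time for Zone 3:", placed_shutter_time(metered, 3), "s")
    # Four stops separate Zone 3 from Zone 7.
    print("Zone 7 vs Zone 3:", relative_luminance(7) / relative_luminance(3))
```

Placing the shadows on Zone 3 means giving two stops less exposure than the meter suggests for that area, which is the "two stops down" in the description above.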

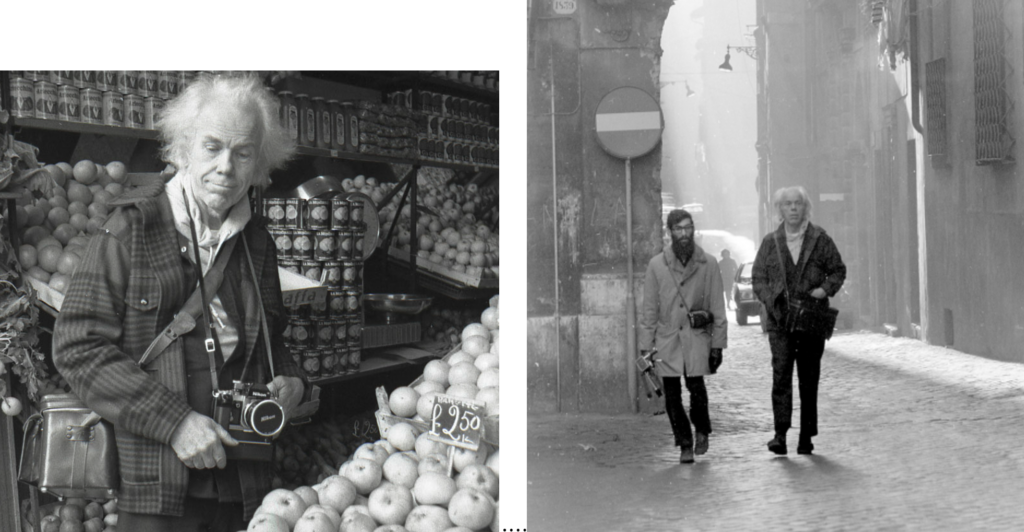

From those courses I also learned about fine art photography and knew about all the photographers, both the currently fashionable and the all-time greats. I would go to the galleries and museums, and we would put on exhibitions at MIT, as well. In between semesters at MIT there was a month-long independent activities period, and for the one in January 1974 the architecture department was sponsoring a photography project in Rome, headed by Minor. Since it was open to any students in the Creative Photography program, I decided to go. That was the best month of my 6 years at MIT. Each student was assigned a location to spend time at – many days, in fact – to make photos (35mm not 4×5). Mine was Piazza del Popolo, just two blocks from the future apartment of my brother-in-law Eddie Orton. It looks much the same today.

My roommate in Rome, Steve Slesinger, was an undergraduate in electrical engineering. He was a talented photographer, and I still have some of his work up on my walls. We lived in a pensione and traveled around on city buses. You get to know a city pretty well doing that day after day for a whole month. At the end of the trip, after eating in cheap cafeterias, we were excited that Minor was taking the whole group, about 8 of us, out to an Italian restaurant. That turned out to be a natural foods place called Soia Gioia. The cafeteria food was better.

My Military Career

Whatever happened to that bit about the Army? In addition to the ROTC classes, in the summer of 1971 I had to go to another 6-week training camp, this time at Indiantown Gap near Harrisburg PA. Northern Appalachia, Trump country. There they reminded us that the real purpose of all this was to learn how to kill the yellow man.

By then I had a really bad attitude about the whole thing. Most of my platoon was from military schools like Norwich University and Virginia Military Institute. You’d think these guys would be gung-ho types, but most hated the war and the military as much as I did. Unlike me, they weren’t deferred until they finished a PhD. No, they were soon going off to Nam as newly minted 2LTs.

Some of them tried passive resistance to get thrown out. Once when we were marching off to the rifle range, one of them just turned around and marched back to the barracks. On the rifle range, everyone had to qualify as Marksman by hitting pop-up targets, which were human silhouettes (just so you wouldn’t forget). I had the idea that if I missed enough targets and failed to qualify, they would somehow let me out. No. Actually, they made the whole platoon stand at attention in the hot sun while I attempted to qualify a second time. Bad call on my part. To boost morale, the Army offered an optional “merit badge” called Recondo that required you to do a few simple exercises. I refused to do them, even when it would have gotten me out of KP. But it was all for naught. I was still on the hook.

In June 1972, my ROTC training was complete and I received my commission. As I had hoped, by then the war was effectively over. They certainly didn’t need more lieutenants to feed into the meat grinder. Accordingly, as an alternative to the two-year active duty obligation, we were allowed to elect Active Duty for Training (ADT), three months of active duty – deferrable until completion of degree – and an eight-year Reserve obligation, starting immediately and going until June 1980! I went for ADT.

I did the three months at Aberdeen Proving Ground in Maryland in the summer of 1976, after completing the experimental portion of my thesis but before writing it up. It was mostly classroom stuff and by then it was coed. The whole thing was like summer camp.

One minor problem, though: I just couldn’t bring myself to join a Reserve unit, which I was supposed to do starting in June 1972. The ROTC commander would call me in and yell at me to do it right away. One day I did drive down to the Army base in South Boston, but I just couldn’t bring myself to go in. I hoped they would forget about it.

In 1975 I got a fat letter from Dept of the Army, Washington DC. This is it, I thought, busted to private and sent immediately to active duty. No, actually it said that since I had no black marks on my service record, I was promoted to First Lieutenant! Later, when I got out of MIT and started working, there was no chance I would join a Reserve unit, but I still worried continuously about being caught. In 1978, same thing, another fat letter from Washington DC. Three years’ time in grade with no black marks on my record, so promoted to Captain. You gotta love those government HR policies! In June 1980 I received an Honorable Discharge as a Captain in the US Army Reserve.

Is Physics Even a Career Path?

Besides preventing me from bailing out of MIT when it clearly wasn’t working for me, the Vietnam War had another impact on my not-yet-started career. The war basically had bankrupted the US economy. In the 60s it was probably an economic stimulus, but by the early 1970s it triggered inflation and low growth, a combination that proved deadly to the presidencies of Ford, Carter, and probably Nixon too. One result of that was decimation of the NSF budget, which was the lifeblood of physics programs in every university. To save faculty jobs, in 1975 MIT took the controversial step of quadrupling the size of the nuclear engineering department in a $1.4 million deal with Iran. Those students later became the core of Iran’s nuclear weapons program. In physics, no longer could the average MIT PhD look forward to a faculty job at a comparable institution. It was more like you hoped to get a teaching job at Fitchburg State. This was hard to accept when the MIT mindset was that a job like that, or God forbid anything in private industry, was strictly for losers.

In October 1976 I had completed my PhD, and it was time to get a job. Academic physics was a dead end. There were no jobs there, and besides, I hated it. The other market for physicists was the defense industry, not for me. My dissertation project was a bust. I was not qualified to work doing biophysics. I was really not qualified to do anything. I had the skills and reasoning process of a physicist, but at best you could call me a generalist. In an age of specialists that was not so good. I was almost 28 years old, and I considered myself unemployable.

I got a job interview at Xerox Research Center in Webster, NY, where they worked on the next generation of office copiers. They showed interest in my graduate work and invited me to present it to the scientists there. During my presentation there was suddenly a strange odor and a siren going off. They told me to ignore it, but a few minutes later everyone had to evacuate the building. Selenium accident, everybody out. I hoped that wasn’t my only opportunity.

There arose one more possibility. It was in Cambridge, in fact, two blocks from my lab at MIT. Even better, it was in photography: Polaroid! Some top secret project, Land’s personal baby, but they couldn’t tell me much about it. I must have had an interview, although I cannot recall it. They certainly had no interest in my dissertation. I do remember a pu pu platter dinner with the boss’s boss at some tiki-torch dive in Norwood, the hinterlands south of Boston. I guess they were looking for a physics generalist. The words MIT, microscopy, photography on my resume may have helped. Against all odds, I had a job! I was almost 28 years old. My job title was Scientist, salary $1700/month, which seemed too good to be true.

And so began the first step in my so-called career.